regularization machine learning mastery

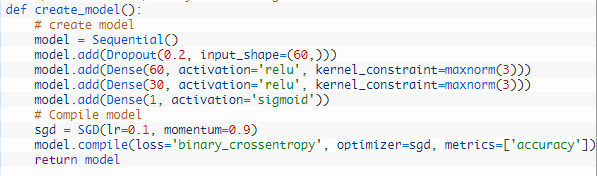

Dropout is a technique where randomly selected neurons are ignored during training. Regularization helps prevent overfitting.
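To make "randomly selected neurons are ignored" concrete, here is a minimal NumPy sketch of *inverted* dropout. This is the common variant that rescales the surviving activations during training; the original formulation instead scales the weights at test time, and the two are equivalent in expectation. The function name `dropout` and its parameters are illustrative, not taken from any particular library.

```python
import numpy as np

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each unit with probability drop_prob while training.

    Assumes 0 <= drop_prob < 1.
    """
    if not training or drop_prob == 0.0:
        # At inference time every neuron is kept and no rescaling is needed.
        return activations
    keep_prob = 1.0 - drop_prob
    # Bernoulli mask: True means the neuron is kept for this forward pass.
    mask = rng.random(activations.shape) < keep_prob
    # Rescale the surviving units so the expected activation matches inference.
    return activations * mask / keep_prob

rng = np.random.default_rng(0)
hidden = np.ones((2, 6))
print(dropout(hidden, drop_prob=0.5, rng=rng))                  # entries are 0.0 or 2.0
print(dropout(hidden, drop_prob=0.5, rng=rng, training=False))  # unchanged
```

A fresh mask is drawn on every forward pass, so the network cannot rely on any single neuron being present.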


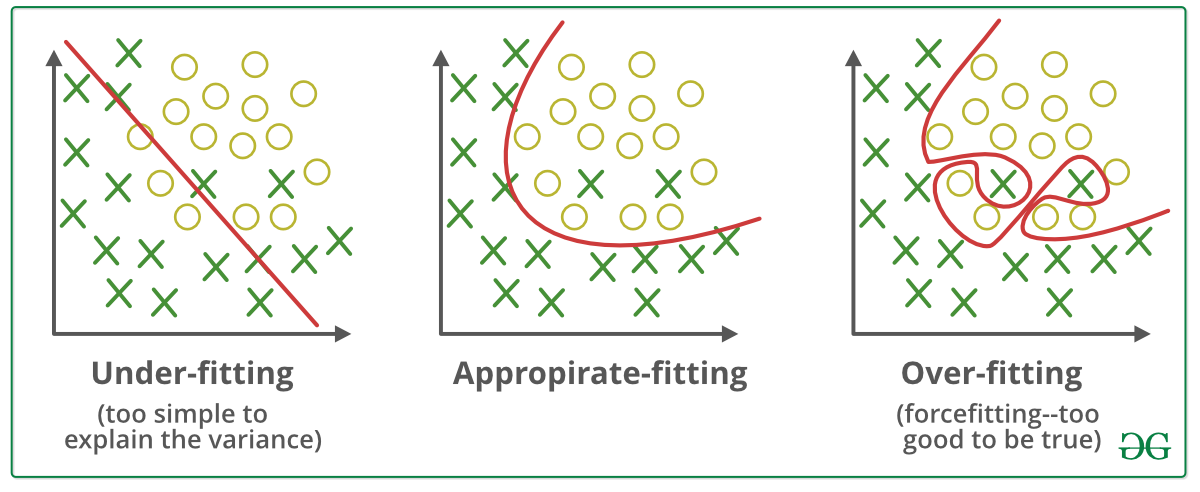

Regularization in machine learning helps you avoid overfitting your model to the training data.

Regularization is a technique to reduce overfitting in machine learning: it prevents the model from overfitting by adding extra information to it. It is one of the most basic and important concepts in machine learning.

The included regularization_ridge.py file contains the code that adds ridge regression. One way to change network complexity is to change the network structure (the number of weights). As the weights grow, the value of the regularization term rises.

In other words, this technique discourages learning a more complex or flexible model, in order to avoid the problem of overfitting. Data points that do not reflect the true properties of your data make your model noisy.

Overfitting happens when your model captures the arbitrary noise in your training dataset. It is one of the most important concepts in machine learning.

This noise may make your model more … Regularization Terms by Göktuğ Güvercin. X1, X2, …, Xn are the features used to predict Y.

We can reduce overfitting by reducing the complexity of a neural network in one of two ways: by changing the network structure (the number of weights) or by changing the network parameters (the values of the weights). Regularization is a set of techniques that can prevent overfitting in neural networks and thus improve the accuracy of a deep learning model when it faces completely new data from the problem domain.

Regularization machine learning mastery, Monday, March 28, 2022.

In the above equation, Y represents the value to be predicted. However, at the same time, the length of the weight vector tends to increase.

One of the major aspects of training your machine learning model is avoiding overfitting. L2 regularization is the most common form of regularization. In this post, let's go over some of the widely used regularization techniques and the key differences between them.

In the case of neural networks, the complexity can be varied by changing the network structure or its parameters. Dropout Regularization For Neural Networks. Adding Ridge regression is as simple as adding an additional term to the loss function.
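The "additional term" idea can be sketched as follows for a linear model: the loss is the mean squared error plus `lam` times the sum of squared weights, and gradient descent minimizes the sum. This is a minimal sketch assuming no intercept (or centered data); the names `ridge_loss`, `ridge_grad`, `fit_gd`, and `lam` are hypothetical, chosen for illustration.

```python
import numpy as np

def ridge_loss(w, X, y, lam):
    """Mean squared error plus an additional L2 (ridge) penalty term on the weights."""
    data_term = np.mean((X @ w - y) ** 2)
    penalty_term = lam * np.sum(w ** 2)
    return data_term + penalty_term

def ridge_grad(w, X, y, lam):
    """Gradient of ridge_loss with respect to w."""
    n = X.shape[0]
    return (2.0 / n) * X.T @ (X @ w - y) + 2.0 * lam * w

def fit_gd(X, y, lam, lr=0.1, steps=2000):
    """Plain gradient descent from w = 0; lam = 0 gives ordinary least squares."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        w -= lr * ridge_grad(w, X, y, lam)
    return w

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))
y = X @ np.array([3.0, -2.0, 0.5]) + 0.1 * rng.normal(size=100)

w_plain = fit_gd(X, y, lam=0.0)
w_ridge = fit_gd(X, y, lam=1.0)
print("no penalty:", np.round(w_plain, 3), "norm", round(float(np.linalg.norm(w_plain)), 3))
print("lam = 1.0: ", np.round(w_ridge, 3), "norm", round(float(np.linalg.norm(w_ridge)), 3))
```

Only the loss and its gradient change; the optimizer itself is untouched, which is why adding the penalty is so cheap.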

Regularization is a form of regression that constrains or shrinks the coefficient estimates towards zero. A regularized model will probably do a better job on future data.
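The shrinkage towards zero can be seen directly with ridge regression's closed-form solution, which solves (XᵀX + λI)w = Xᵀy. The sketch below assumes no intercept and uses synthetic data; `ridge_closed_form` and `lam` are illustrative names. In practice the intercept β0 is usually left unpenalized or the data are centered first.

```python
import numpy as np

def ridge_closed_form(X, y, lam):
    """Minimize ||X w - y||^2 + lam * ||w||^2 by solving (X^T X + lam I) w = X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)

rng = np.random.default_rng(42)
X = rng.normal(size=(50, 4))
y = X @ np.array([4.0, -3.0, 2.0, 1.0]) + rng.normal(size=50)

# Larger lam means a stronger penalty, so the coefficients are pulled closer to zero.
for lam in [0.0, 1.0, 10.0, 100.0, 1000.0]:
    w = ridge_closed_form(X, y, lam)
    print(f"lam={lam:>7}: w={np.round(w, 3)}, ||w||={np.linalg.norm(w):.3f}")
```

With `lam = 0.0` this reduces to ordinary least squares; as `lam` grows, the norm of the coefficient vector decreases steadily but never reaches exactly zero.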

Data augmentation and early stopping are also used as regularization techniques.
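Early stopping monitors the loss on held-out validation data and halts training once it stops improving. The minimal sketch below assumes a "patience" rule (stop after `patience` epochs without improvement); the class name `EarlyStopping` and the validation-loss values are hypothetical inputs for illustration, not measured results. Frameworks such as Keras ship a comparable callback.

```python
class EarlyStopping:
    """Stop training once validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.epochs_without_improvement = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience

# Hypothetical validation-loss curve: improves, then rises as the model starts to overfit.
val_curve = [1.00, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.67]
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_curve):
    if stopper.step(epoch, loss):
        print(f"stopped at epoch {epoch}; best epoch {stopper.best_epoch} (loss {stopper.best_loss})")
        break
```

In a real training loop you would also save the weights from `best_epoch` and restore them after stopping.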

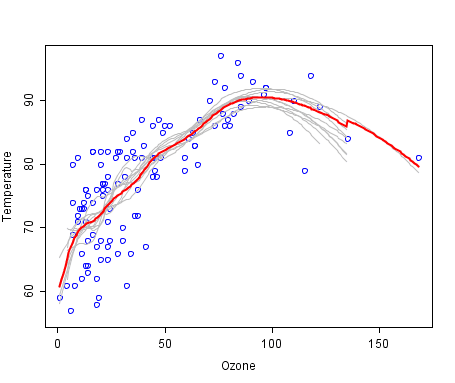

In Figure 4, the black line represents a model without Ridge regression applied, and the red line represents a model with Ridge regression applied. Note how much smoother the red line is. Activity (or representation) regularization provides a technique to encourage the learned representations, that is, the output or activation of the hidden layer or layers, to stay small and sparse.
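Activity regularization penalizes the hidden-layer *outputs* rather than the weights. The sketch below shows how such an L1 activity penalty could enter the loss of a one-hidden-layer network. The network shape, the random weights, and the name `activity_l1` are assumptions for illustration; training would minimize the total with respect to `W1` and `W2`, which is omitted here. Keras layers accept an `activity_regularizer` argument for the same idea.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def regularized_loss(W1, W2, X, y, activity_l1):
    """MSE of a one-hidden-layer network plus an L1 penalty on its hidden activations."""
    hidden = relu(X @ W1)            # learned representation (hidden-layer output)
    pred = hidden @ W2
    data_loss = np.mean((pred - y) ** 2)
    # Penalize the activations themselves, not the weights: average L1 norm per example.
    activity_penalty = activity_l1 * np.mean(np.sum(np.abs(hidden), axis=1))
    return data_loss + activity_penalty, data_loss, activity_penalty

rng = np.random.default_rng(0)
X = rng.normal(size=(32, 5))
y = rng.normal(size=(32, 1))
W1 = rng.normal(size=(5, 16))
W2 = rng.normal(size=(16, 1))

for coeff in [0.0, 0.01, 0.1]:
    total, data, penalty = regularized_loss(W1, W2, X, y, coeff)
    print(f"activity_l1={coeff}: data={data:.3f}, penalty={penalty:.3f}, total={total:.3f}")
```

The data term is the same in every row; only the penalty changes, so a larger coefficient pushes the optimizer towards smaller, sparser activations.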

While optimization algorithms try to reach the global minimum of the loss curve, they actually decrease the value of the first term in those loss functions, that is, the summation part. Regularization works by adding a penalty, or complexity, term to the loss of a complex model. Dropout is a regularization technique for neural network models proposed by Srivastava et al.

β0, β1, …, βn are the weights (magnitudes) attached to the features. Let's consider the simple linear regression equation. Optimization function = Loss + Regularization term.
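For reference, the linear regression equation and the "Loss + Regularization term" objective can be written out as below. This assumes m training examples indexed by i, features indexed by j, and a ridge (L2) penalty with tuning parameter λ ≥ 0; the symbols m, i, j, and λ are introduced here for illustration. The first term is the summation part that optimizers drive down, and the second term grows with the length of the weight vector.

$$
Y \approx \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_n X_n
$$

$$
\underbrace{\sum_{i=1}^{m} \Big( y_i - \beta_0 - \sum_{j=1}^{n} \beta_j x_{ij} \Big)^2}_{\text{loss}}
\; + \;
\underbrace{\lambda \sum_{j=1}^{n} \beta_j^2}_{\text{regularization term}}
$$

Setting λ = 0 recovers ordinary least squares; increasing λ trades a slightly higher loss on the training data for smaller coefficients.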

Srivastava et al. introduced dropout in their 2014 paper "Dropout: A Simple Way to Prevent Neural Networks from Overfitting." Regularization is essential in machine and deep learning.

Another way is to change network complexity by changing the network parameters (the values of the weights).

Regularization In Machine Learning Simplilearn

Linear Regression For Machine Learning

Overview Of The Artificial Intelligence Methods And Analysis Of Their Application Potential Springerlink

A Tour Of Machine Learning Algorithms

Machine Learning Mastery With Weka Pdf Machine Learning Statistical Classification

What Role Does Regularization Play In Developing A Machine Learning Model When Should Regularization Be Applied And When Is It Unnecessary Quora

![]()

Machine Learning Mastery Workshop Enthought Inc

![]()

Start Here With Machine Learning

Regularization In Machine Learning Regularization Example Machine Learning Tutorial Simplilearn Youtube

What Is Regularization In Machine Learning

Regularization In Machine Learning Regularization Example Machine Learning Tutorial Simplilearn Youtube

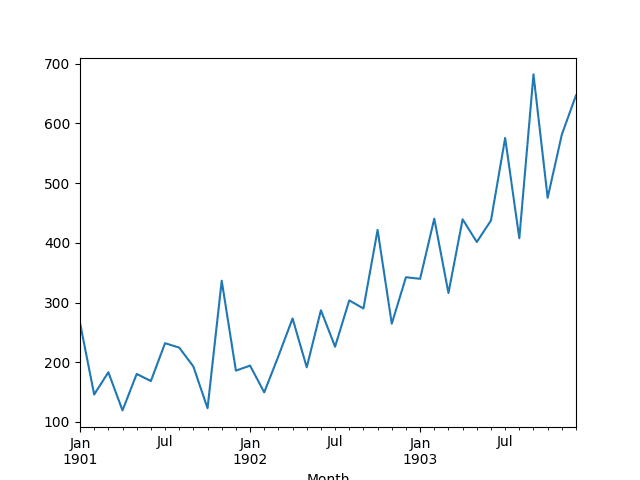

Weight Regularization With Lstm Networks For Time Series Forecasting

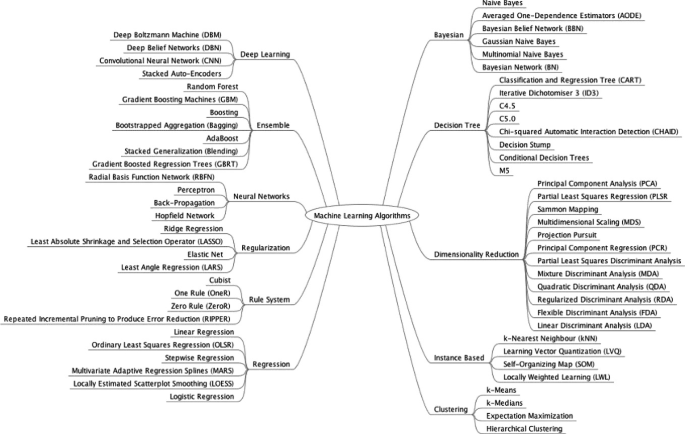

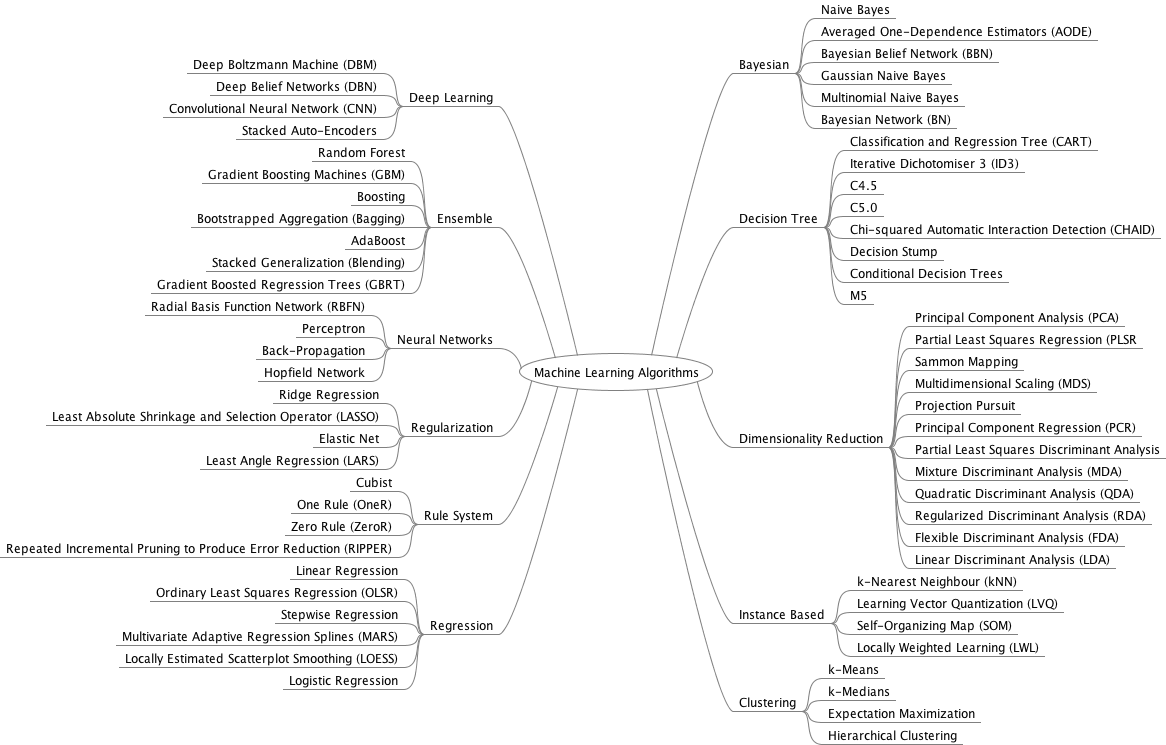

A Tour Of Machine Learning Algorithms

Regularisation Techniques In Machine Learning And Deep Learning By Saurabh Singh Analytics Vidhya Medium

Machine Learning Algorithm Ai Ml Analytics

The Machine Learning Mastery Ebook Bundle Down To 20 From 223 9 Ends In 1 Day Anyone Have Experience With These Books R Learnmachinelearning

Types Of Machine Learning Algorithms By Varun Ravi Varma Pickled Minds Medium